If remote teaching has taught us anything, it is that some meetings definitely could have been emails, and some trainings work better digitally. Distance learning has demonstrated that the traditional method of gathering educators in a large room with gallons of coffee, uncomfortable ice-breakers, and a PowerPoint is not necessarily the best way to get every type of information across.

As educational technology expands into all aspects of professional development, it is essential that instructional coaches take time to reflect on the use of technology and make careful decisions to ensure that it is used effectively. The TPACK (Technological Pedagogical Content Knowledge) model provides teachers and coaches with a visual tool to examine how the technology used in lessons and professional development can best meet the needs of learners.

Given this newly opened horizon, with its array of methods and mediums for training teachers, academic coaches must evaluate how lessons or PD are delivered to determine whether to deliver them digitally, in person, or through a flipped or hybrid model. These decisions are essential components of ISTE standard 3.3, which is to “partner with educators to evaluate the efficacy of digital learning content and tools to inform procurement decisions and adoptions” (ISTE, 2021).

Desimone: Core Features of PD

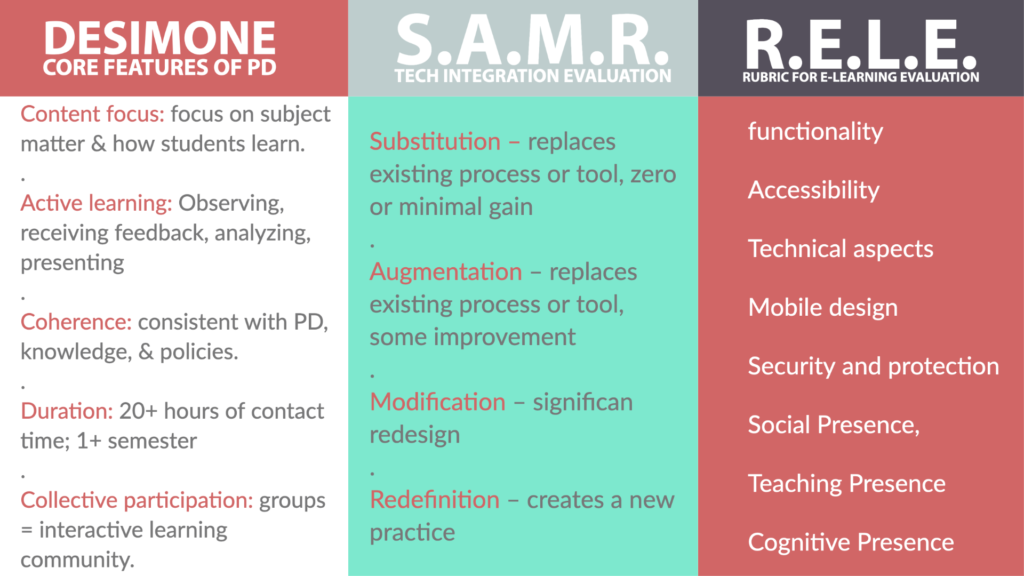

Multiple studies and sources offer methods for identifying the elements that make professional development successful. Desimone suggests a features-based approach, arguing that “to assess the effectiveness of professional development programs, no matter what types of activity they include, we should measure common features that research shows are related to the outcomes we care about” (2011). With this in mind, Desimone proposes the following core features of effective professional development: content focus, active learning, coherence, duration, and collective participation (Desimone, 2011). Desimone recommends using a variety of high-quality evaluative tools, including observations, interviews, and surveys, to assess these core features (2011).

SAMR Model

Crawford-Thomas identifies Puentedura’s SAMR model as a tool for ongoing evaluation of professional development and learning technologies, noting that “SAMR can work as an audit tool to assess how the use of technology is shaping teaching and learning practice” (Crawford-Thomas, 2020). The SAMR model identifies the role new technology plays in changes to instruction or training. It divides that role into four non-hierarchical categories: substitution (old technology is replaced with little improvement), augmentation (replacement with improvement), modification (the technology radically shifts instruction), and redefinition (the technology results in new practices) (Crawford-Thomas, 2020). By identifying the role that new technology plays in a professional development session or lesson, coaches can recommend different technology or ensure that the technology is used to its fullest potential. For example, Zoom replaced the staff meetings typically held in the library. In doing so, it fit the substitution category because it caused minimal change or improvement in the quality of trainings: breakout rooms allowed for small-group work, and the main room functioned as a lecture space. To move Zoom into the modification category, however, coaches would need to take advantage of digital-only features such as direct chat and screen sharing. While SAMR is primarily focused on technology use, its categories are helpful in guiding professional development design choices.

Rubric for e-Learning Evaluation

The Rubric for e-Learning Evaluation, developed at Western University, is an extensive rubric that examines multiple facets of digital education tools and is useful for teachers and instructional coaches. The rubric is divided into sections covering functionality, accessibility, technical aspects, mobile design, security and protection, social presence, teaching presence, and cognitive presence (Anstey & Watson, 2018). Anstey and Watson, the designers of the rubric, assert that a tool suited to one type of information acquisition is not necessarily the best tool for a different professional development session, explaining that “e-learning tools should be chosen on a case-by-case basis and should be tailored to each instructor’s intended learning outcomes and planned instructional activities” (Anstey & Watson, 2018). The rubric is thorough but can be unwieldy; a simplified version assessing functionality, accessibility, design, and technical aspects would be useful for coaches when choosing technology for instruction.

Resources

Anstey, L., & Watson, G. (2018, September 10). A Rubric for Evaluating E-Learning Tools in Higher Education. EDUCAUSE. https://er.educause.edu/articles/2018/9/a-rubric-for-evaluating-e-learning-tools-in-higher-education

Crawford-Thomas, A. (2020, August 12). How the SAMR learning model can help build a post-COVID digital strategy. Jisc. https://www.jisc.ac.uk/blog/how-the-samr-learning-model-can-help-build-a-post-covid-digital-strategy-12-aug-2020

Desimone, L. M. (2011). A Primer on Effective Professional Development. Phi Delta Kappan, 92(6), 68–71. https://doi.org/10.1177/003172171109200616

Guide to Evaluating Professional Development. (2019, October 8). CDC. https://www.cdc.gov/healthyschools/tths/pd_guide.htm

Guskey, T. (2002). Does It Make a Difference? Evaluating Professional Development. Educational Leadership, 59(6), 45–51. http://www.ascd.org/publications/educational-leadership/mar02/vol59/num06/Does-It-Make-a-Difference%C2%A2-Evaluating-Professional-Development.aspx

ISTE. (2021). ISTE Standards for Coaches. https://www.iste.org/standards/iste-standards-for-coaches

Kao, C.-P., Tsai, C.-C., & Shih, M. (2014). Development of a Survey to Measure Self-efficacy and Attitudes toward Web-based Professional Development among Elementary School Teachers. Educational Technology & Society, 17(4), 302–315. https://www.ds.unipi.gr/et&s/journals/17_4/21.pdf

Rebora, A. (2021, April 11). Teachers Still Struggling to Use Tech to Transform Instruction, Survey Finds. Education Week. https://www.edweek.org/technology/teachers-still-struggling-to-use-tech-to-transform-instruction-survey-finds/2016/06?tkn=SUNF3xA1W22FFtjNlbjUg5JOX4Y8vP7i4W5T&intc=es